When ChatGPT is better than your doctor

Evidence is emerging that ChatGPT can outperform doctors. I'm rather excited about the prospect of having a 24/7 medical expert who is both competent AND always in a good mood.

Before I discuss the topic in the title, I'd like to mention that I just started a new AI-focused newsletter called “Prompt Engineering”. The goal is to share experiences on how to communicate with large language models like ChatGPT. This is a rapidly evolving space, which is why a newsletter format seems like a great choice. I will share what I'm learning as I use LLMs daily, both personally and professionally.

If that is of interest to you, please subscribe. The new newsletter will also help me draw a clearer line between talking about ChatGPT in the medical field (as I do below) and giving practical advice (as I will do in the new newsletter).

With that out of the way, let’s look at a recent study on ChatGPT in medicine.

The black-box “problem” in medicine

As a professor working at the intersection of technology and health, I am frequently asked to participate in panels about "the future of health". Inevitably, someone will make a point about the black-box nature of AI models.

The moderator will ask, "Aren't you concerned about the black-box nature of AI models?"

To which I reply with a counter-question, "Why should I be worried?"

The moderator then explains that without understanding how the AI arrived at its conclusion, it would be difficult to trust its diagnosis.

I then quip, "Oh, you mean like a doctor?"

Although it's a joke, I'm not trying to be snarky. While I understand that doctors are highly trained and have reasoning abilities that current AI models are incapable of, what matters to me is a correct diagnosis and recommendations for treatment.

ChatGPT as a better doctor

Last week, a paper published in JAMA Internal Medicine asked the following question: Can an artificial intelligence chatbot assistant provide responses to patient questions that are of comparable quality and empathy to those written by physicians? (Emphasis added).

The answer was clear: Oh, yes.

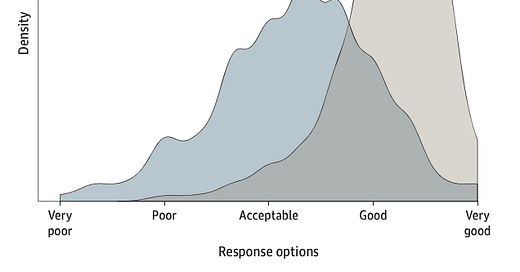

Take a look at these graphs:

Notice how ChatGPT’s answers are almost always good or very good. In contrast, doctors’ responses are rarely very good; most fall somewhere between acceptable and good, and quite a few are poor.

The second graph looks at empathy:

No further questions, your honor.

Now, this was a relatively small study in a particular setting. The study looked at 195 randomly drawn patient questions from a social media forum, and the quality and empathy assessments were given by other healthcare professionals, not the patients. However, I think this is just one of many studies that will appear in the coming months, showing just how beneficial ChatGPT can be in the medical domain.

AI for patients

The key point for me is that AI models interfacing directly with patients are going to change medicine dramatically in the coming months and years. For a long time, many of the discussions on AI in medicine have really been about AI for doctors, not AI for patients. The core idea was mostly, "How can AI help doctors in their jobs?"

If your reaction is that doctors will likely not encourage patients to use ChatGPT, you’re most probably right. But in the early days of Google, doctors had serious concerns about “Dr. Google”, and yet, already in 2013, about three-quarters of the US population searched for health information online. Google reports that between 5% and 10% of all searches are health-related. It’s likely that we’ll soon see similar numbers in the use of LLMs.

What I take away from this paper is that most health-related interactions in the future will be with an AI model rather than a person. We will need to ensure that these models are safe and effective, and we do have very good processes in place to establish this, such as those implemented by the FDA and similar organizations.

Here’s the full paper: Comparing Physician and Artificial Intelligence Chatbot Responses to Patient Questions Posted to a Public Social Media Forum